Beyond Binary Causality

Maturing the scientific method from Enlightenment into Singularity

Scientific methodology is like joining a video call during a pandemic: you think everything is polished and tidy until you see what’s happening in person. Methodology is not purely a function of which data and analysis will best address the hypothesis. Feasibility and ease of execution in the context of day-to-day life have always been key constraints. When the #overlyhonestmethods hashtag went viral in 2013, it was as if a collective sigh of relief rose from labs around the world - we were all equally guilty, and in this collective recognition we felt less like hypocrites.

But really, scientific methodology has always been constrained by the feasibility of making observations, collecting data, and analyzing results. The scientific method is taught as a standardized approach that merely adapts but does not change over time…well, that’s just not true. The scientific method has changed greatly over the last 2500 years, driven by changes in the tools available for observation and analysis.

Aristotelianism: experiment-free science

Aristotle is commonly referenced as the progenitor of Western scientific methodology. Widely recognized in his own time, then baked into university curricula beginning in the early 1300s, Aristotle's approach was the de facto approach to science and the reference point for debate on methodology for nearly two millennia.

In Aristotle’s lifetime, the tools available for biological observation were generally limited to the human senses. He dedicated significant time to systematically observing and describing plants and animals (though little of his botanical work survived to the modern day), noted patterns common to groups, and inferred possible causal explanations.

This was Aristotle’s scientific methodology: begin with observations, then use inductive reasoning to identify a general principle. At this point, you are at a very high risk of false conclusions from limited information (analogous to sampling bias that modern statistics attempt to sniff out). To reduce that risk, you then make a deduction from the general principle that you can cross-check against further observations.

To make this concrete, imagine that you eat a habanero, a jalapeño, and a scotch bonnet pepper. Each pepper makes your mouth burn, so your observations lead you to the general principle that chile peppers make your mouth burn. Next, you deduce that, since bell peppers are a type of chile pepper, eating a bell pepper will also make your mouth burn. In the name of science you tempt the fate of your mouth one more time and eat a bell pepper. You discover that your mouth does not burn after eating the bell pepper, and conclude that the general principle was incorrect.
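The pepper example can be sketched in a few lines of Python. This is a toy illustration rather than a formalization of Aristotle's logic: the function names (`induce`, `deduce`, `cross_check`) and the burn/no-burn encoding are assumptions made for this sketch.

```python
# Toy sketch of the observe -> induce -> deduce -> cross-check loop.
# All names and the True/False "burn" encoding are illustrative assumptions.

# Step 1: observations (pepper -> did it burn?)
observations = {"habanero": True, "jalapeño": True, "scotch bonnet": True}


def induce(observed):
    """Induction: accept 'chile peppers burn' only if every observed case burned."""
    return all(observed.values())


def deduce(principle_holds, is_chile_pepper):
    """Deduction: if the principle holds, any chile pepper is predicted to burn."""
    return principle_holds and is_chile_pepper


def cross_check(prediction, outcome):
    """The principle survives only if the prediction matches the new observation."""
    return prediction == outcome


principle = induce(observations)                 # general principle from three peppers
prediction = deduce(principle, is_chile_pepper=True)  # bell pepper is a chile pepper
survives = cross_check(prediction, outcome=False)     # the bell pepper did not burn

print("principle induced:", principle)
print("predicted burn for bell pepper:", prediction)
print("principle survives cross-check:", survives)
```

A single contradicting observation is enough to overturn the principle, which is exactly why the deduction step matters when the initial sample is small.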

This approach lacks a true experiment, but it enables a significant body of knowledge to be accumulated. In fact, this process is still commonly employed in the early exploration of new methods. Recall the numerous studies of the 2000s in which genome after genome was sequenced in the hope of uncovering potential associations with diseases or features of species.

Enlightenment: empiricism and experiments

Starting around the mid-1500s, the resources available to scientists rapidly changed; advances in tooling, mathematics, and philosophy transformed the nature of scientific investigation.

At the foundation was the standardization of measurement. Rather than relying solely on human senses for observation, the development of chronometers, thermometers, and standard units for quantities such as mass and length made observations consistent from scientist to scientist. This ability to precisely and accurately measure conditions ushered in a new possibility: experimentation, where conditions could be changed consistently and the effects of these changes could be reproduced.

Astronomy was the first field to be radically impacted. The combination of the sextant and chronometer transformed astronomy into the first modern science in which long-held beliefs could be rejected based on new evidence. Aristotelian methodology came under increasing scrutiny (1) and was even rejected entirely by Francis Bacon, who championed the concept of finding truth by disproving alternatives.

The concurrent development of mathematical frameworks brought another revolutionary change: quantitative analysis and statistics. Statistics and probability were new tools against the old problem of bias, while the normal distribution became a framework for understanding variability in biological systems.

And of course, the microscope: the tool that revealed the cell and kicked off the long march into molecular biology. Like any new frontier, the early focus was qualitative and observational, yet the microscope revealed an entirely new system of mechanisms underlying the known systems of tissues, organs, and organisms.

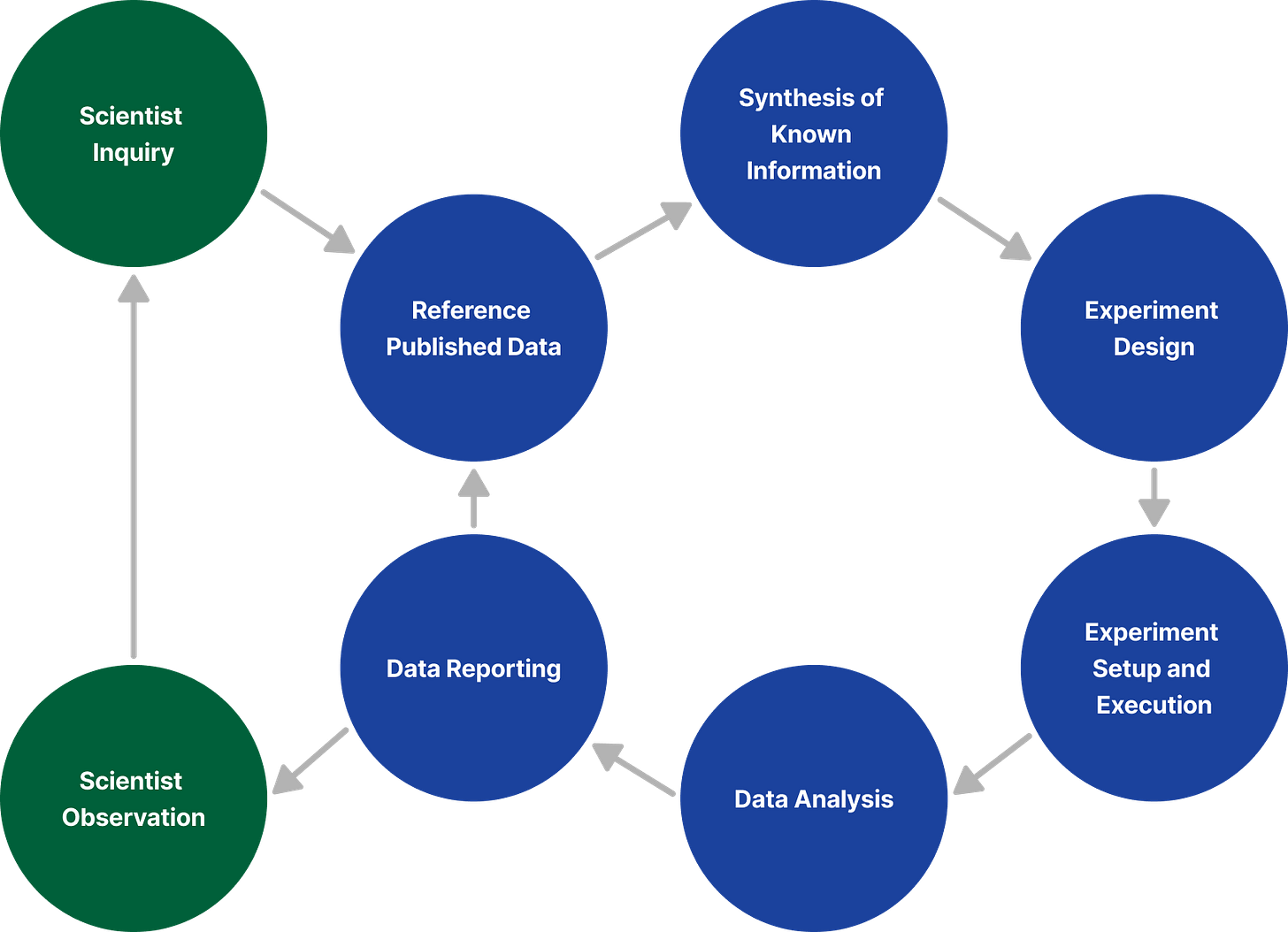

The newfound capabilities of precise measurement and mathematical analysis, combined with the adoption of experimentalism, created the need for a new scientific method based on elucidating causality. Observations lead to questions, posed as a null and an alternative hypothesis (representing no causality and a causal link, respectively). Experiments are designed to control as many variables as possible - ideally all of them except the causal link being explored. Data is generated and analyzed with statistics, and conclusions are reported to the broader scientific community.
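To make the null-versus-alternative framing concrete, here is a minimal sketch of one way such a test can be run: a two-sided permutation test on the difference in group means. The measurements are made-up numbers for illustration, and the function name `permutation_test`, the 0.05 threshold, and the number of permutations are assumptions of this sketch, not a prescribed procedure.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_test(control, treated, n_permutations=5000, seed=0):
    """Two-sided permutation test on the difference in group means.

    Null hypothesis: group labels are irrelevant (no causal link).
    Returns (observed difference, approximate p-value).
    """
    rng = random.Random(seed)
    observed = mean(treated) - mean(control)
    pooled = list(control) + list(treated)
    n_control = len(control)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random
        diff = mean(pooled[n_control:]) - mean(pooled[:n_control])
        # Small tolerance guards against floating-point rounding in the sums.
        if abs(diff) >= abs(observed) - 1e-12:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    p_value = (at_least_as_extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Made-up measurements, for illustration only.
control = [4.8, 5.1, 5.0, 4.9, 5.2, 5.0]
treated = [5.9, 6.1, 6.0, 5.8, 6.2, 6.0]

observed, p_value = permutation_test(control, treated)
reject_null = p_value < 0.05  # the binary verdict this method is built around

print(f"observed difference: {observed:.2f}")
print(f"approximate p-value: {p_value:.4f}")
print("reject null hypothesis:", reject_null)
```

Note that the output of the whole exercise collapses to a single yes-or-no answer about one causal link.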

This approach has proven remarkably durable across multiple revolutions in biological understanding: cell theory and germ theory; evolution by natural selection; DNA; protein synthesis; epigenetics; and genetic engineering by restriction enzymes, viruses, and CRISPR, to name a few.

The end of an era

For all of our discoveries, the cracks in the approach are showing. You’re already familiar with them: off-target effects of drugs, the delays in discovering modulatory mechanisms relative to synthetic and signaling pathways…not to mention the common pattern in biology of declaring canonical pathways followed by decades of publications describing seemingly infinite exceptions.

A lot of this is due to feasibility limits on the volume and timing of data collection, processing, and analysis. Together these significantly limited the number of data points that could be evaluated. In turn, the standard implementation of the scientific method was to control every variable, so that only a single variable would differ between experimental conditions. Controls were implemented but in truth provided false reassurance - we only controlled for what we already knew.

More limitations come from the reliance on reductionist approaches to evaluate causality. Of course, the only effects discovered are those observed and measured, naturally leading to missed effects - true for all sciences. But specific to biology is the fact that biological systems are highly resilient: large disruptions in one system may shift homeostasis in a way that obscures the effect on other systems. In a highly resilient system, only minimal effects may be observed even when the system has been significantly disrupted from the ‘normal’ pathway.

Addressing implementation alone is not sufficient: there are structural limitations to the methodology of the current scientific method that are at odds with our modern understanding of biology.

As explained earlier, the current scientific method is intended to identify causality. More specifically, this methodology works best when the causal relationships are singular and unidirectional. That alone tells us that this approach is not sufficient for the study of biology because:

Biology isn’t a series of linear, unidirectional pathways.

Biological systems have evolved to maintain homeostasis in the face of change, and consequently tend to be highly resilient and highly redundant. A hallmark of biological systems is that the disruption of critical system components will frequently yield only small observable changes in a given metric.

Biological systems involve complex, multi-modal modulatory mechanisms that are extraordinarily difficult to observe through a framework of causality.
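The second point, that disrupting a critical component can yield little observable change, can be illustrated with a deliberately simple toy model. Everything here is an assumption made for the sketch: three redundant pathways, each able to supply 0.6 units of some output, serving a system that only needs 1.0 unit. It is not a model of any real biological system.

```python
# Toy model of redundancy masking a knockout.
# Pathway capacities and the demand value are invented for illustration.

def system_output(capacities, demand=1.0):
    """Measured output: the system delivers what is demanded, up to total capacity."""
    return min(demand, sum(capacities))


baseline = system_output([0.6, 0.6, 0.6])          # all three pathways intact
single_knockout = system_output([0.0, 0.6, 0.6])   # one pathway removed
double_knockout = system_output([0.0, 0.0, 0.6])   # two pathways removed

print("baseline output:       ", baseline)
print("single knockout output:", single_knockout)
print("double knockout output:", double_knockout)
```

In this sketch a single-variable experiment that knocks out one pathway sees no change in the measured output, even though that pathway carries a third of the system's capacity. The contribution only becomes visible when a second pathway is also removed.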

Welcome to singularity

Much like the development of standardized measurements and statistics ushered in the scientific method of the Enlightenment, the development of automation technologies and AI will usher in the scientific method of singularity.

Automation technology radically transforms the scope of data collection that is feasible. Sampling can be performed at any time of day with impeccable consistency; the results can be used to maintain better control over environmental conditions, enable non-time-based endpoints, allocate resources more efficiently, and more.

Automation also enables the use of significantly smaller sample volumes and the ability to process more samples in parallel. This pairs beautifully with new chemistries that enable measurement of multiple endpoints in a single sample. Together this unlocks a new capability to capture orders of magnitude more data points. Monitoring of biological systems can begin to chase the dream of comprehensive data capture.

But with automation alone, we would have a massive data problem, or more specifically a glut of data without the bandwidth to fully convert the data into information. While software has done a lot to scale our ability to analyze data, only with AI do we fully unlock the ability to manage the varying structures and complexity of comprehensive data capture. Further, AI can enable a reduction in at least one form of human bias, our pesky tendency to only analyze associations that “make sense” to investigate (2).

An overwhelming quantity of data to process is not the only overwhelm that scientists face. Already the number of publications in many fields exceeds the ability of any individual to read every paper, and the number of publications per year is only rising. AI tools enable scientists to stay abreast of developments in their fields and help ensure that papers that warrant a deep dive are brought to their attention. The use of these tools allows researchers to remain informed in their primary fields as well as areas of cross-disciplinary knowledge. This provides a significant boost for innovation, as cross-disciplinary knowledge is critical for innovation (3).

How the scientific method will change in the context of singularity

As the frontiers of biology expand and exploration is fueled by the advancement of automation and AI (and particularly in the form of self-driving labs), there are some obvious changes coming to the scientific method. Clear lanes will emerge in terms of the role of the scientist versus the role of automation and the role of AI.

From an experimental perspective, constructive approaches, in which we attempt to build systems, will overtake the classical reductive approaches. With this the structure of hypotheses will change, rejecting the model of a single pair of null and alternative hypotheses searching for causality in favor of exploring outcomes with greater sensitivity to modulatory effects. The very nature of this shift will expand the alternative hypotheses explored.

The changes in experimentation will be matched by changes in statistical analysis and reporting that go beyond binary causal links. Instead of seeking pure rejection of a hypothesis, approaches will be implemented that attempt to quantify the probability of validity, alongside those that assess the resiliency of the proposed model, the resiliency of the biological system, and the numerical size of a modulatory impact.
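One existing technique that points in this direction is to report an effect size with an uncertainty interval instead of a reject/do-not-reject verdict. The sketch below uses a percentile bootstrap for that purpose. It is offered as one possible illustration, not as the method the coming shift will settle on; the data are made up, and the function name `bootstrap_effect` and its parameters are assumptions of this sketch. The "fraction positive" figure is a rough resampling-based indicator, not a formal posterior probability.

```python
import random


def mean(values):
    return sum(values) / len(values)


def bootstrap_effect(control, treated, n_resamples=5000, seed=0):
    """Percentile-bootstrap summary of the difference in group means."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_resamples):
        # Resample each group with replacement, keeping the group sizes.
        c = [rng.choice(control) for _ in control]
        t = [rng.choice(treated) for _ in treated]
        diffs.append(mean(t) - mean(c))
    diffs.sort()
    return {
        "effect": mean(treated) - mean(control),          # point estimate
        "ci_low": diffs[int(0.025 * n_resamples)],        # 2.5th percentile
        "ci_high": diffs[int(0.975 * n_resamples) - 1],   # 97.5th percentile
        # Share of resamples in which the effect is above zero.
        "fraction_positive": sum(d > 0 for d in diffs) / n_resamples,
    }


# Made-up measurements with a modest, modulatory-sized shift.
control = [5.0, 5.2, 4.9, 5.1, 5.0, 4.8]
treated = [5.3, 5.1, 5.4, 5.0, 5.5, 5.2]

summary = bootstrap_effect(control, treated)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

The result is a magnitude with a range around it, which leaves room to describe a small but real modulatory shift that a binary test might simply label "not significant".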

(1) To be clear, Aristotle’s approach had faced previous scrutiny, but those critiques did not diminish the dominance of his approach.

(2) I cannot resist the opportunity to mention Roger Bacon’s four sources of error:

Unreliable and unsuited authorities

Customs or long-enduring habits

The opinion of the ignorant masses

Displaying pretense of knowledge to disguise ignorance