The Clickbait of AI for Biology

Why the Revolution is Still Waiting for Data

In spring 2025, 23andMe filed for bankruptcy, putting the company's assets, including the genetic information of approximately 15 million people, up for sale. The headlines were predictably dramatic. Privacy advocates warned of unprecedented risks to personal data, while commentators speculated about who would bid on it.

As it turns out, almost no one wanted the data.

Regeneron initially emerged as the sole bidder, offering $256 million for the entire company: assets, genetic data, and all.[1] When I read news of the offer, my first thought was “that’s it?” Seven years ago, GlaxoSmithKline invested $300 million for four years of exclusive access to the same-ish dataset. It was the same dataset in that GSK was getting access to 23andMe’s data, but with millions fewer data points and term-limited exclusivity.

Inflation has been on a tear since 2018, and that $300 million investment is worth about $384 million in 2025 dollars. Regeneron effectively offered two-thirds as much to permanently own the data as GSK paid for just four years of access, despite the dataset now being larger and AI analysis far more sophisticated. Why, with more data and seven years of breakthroughs in AI-leveraged data analysis, is it worth so much less?
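For anyone who wants to check that comparison, here is the arithmetic as a short sketch. The three dollar figures come straight from the text above; the variable names are mine, and the inflation rate is simply what those figures imply rather than an official statistic.

```python
# Figures quoted in the article (USD); variable names are illustrative.
gsk_2018 = 300_000_000             # GSK's 2018 investment for 4 years of exclusive access
gsk_in_2025_dollars = 384_000_000  # the article's inflation-adjusted estimate of that deal
regeneron_2025 = 256_000_000       # Regeneron's 2025 offer for the whole company

# Cumulative inflation implied by the two GSK figures (not an official CPI number).
implied_inflation = gsk_in_2025_dollars / gsk_2018 - 1

# Regeneron's offer as a fraction of the adjusted GSK deal.
offer_ratio = regeneron_2025 / gsk_in_2025_dollars

# What GSK effectively paid, in 2025 dollars, per year of access.
gsk_per_access_year = gsk_in_2025_dollars / 4

print(f"Implied cumulative inflation, 2018-2025: {implied_inflation:.0%}")
print(f"Regeneron offer vs. adjusted GSK deal: {offer_ratio:.0%}")
print(f"GSK's adjusted cost per year of access: ${gsk_per_access_year:,.0f}")
```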

This is a reality check for overhyped headlines claiming that with the right AI algorithm, we can just analyze the existing datasets and revolutionize biology and healthcare. The truth is that we're nowhere near having the data we need to fulfill AI's most ambitious biological promises.

When Big Data isn’t Enough

The 23andMe dataset represents something rare in scale and scope. Of the 15 million customers who submitted samples, 80% consented to having their data used for medical research. And it wasn’t just DNA data: many also submitted phenotypic and behavioral information.[2] That's ~12 million people who had already agreed to let their genetic information advance medical discovery, a dream scenario for large-scale genomic studies.

Many speculated that pharmaceutical companies and insurance companies would be lining up to submit their bids. While insurance companies were never likely bidders, it was reasonable to think that pharmaceutical companies would be interested. After all, they are the ones racing to leverage AI for drug discovery, and if there’s one thing the headlines have made clear, it’s that AI thrives on massive datasets.

GSK didn't submit a bid, but since they already had their turn with it, that wasn’t surprising. And then…nearly nobody else submitted a bid either. This collective indifference from an industry that routinely spends billions on far more speculative ventures speaks volumes about the utility of current genetic datasets.

The problem isn't just 23andMe's specific approach, although its limitations are instructive. Instead of sequencing entire genomes,[3] 23andMe screened for single nucleotide polymorphisms (SNPs, pronounced “snips”). A SNP is a type of genetic variation in which a single nucleotide at a given position in the sequence differs between individuals.

A SNP is considered "common" if it appears in at least 1% of people. While the human genome contains approximately 10 million common SNPs, 23andMe's most recent chip analyzed 640,000 SNPs. That’s just 6% of the total. From a drug development perspective, this creates the first major limitation: you're seeing only a small slice of one type of genetic variation.
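To put a number on that small slice, here is the coverage calculation as a quick sketch. Both inputs are the approximate figures quoted above; the variable names are mine.

```python
# Approximate figures from the text; names are illustrative.
common_snps_in_genome = 10_000_000  # common SNPs in the human genome (approximate)
snps_on_chip = 640_000              # SNPs analyzed by 23andMe's most recent chip

# Fraction of common SNPs the chip actually measures.
coverage = snps_on_chip / common_snps_in_genome

# Common SNPs the chip never looks at.
unmeasured = common_snps_in_genome - snps_on_chip

print(f"Chip coverage of common SNPs: {coverage:.1%}")
print(f"Common SNPs left unmeasured: {unmeasured:,}")
```

And that is only common SNPs. Rare variants and other kinds of genetic variation are not part of this calculation at all.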

The second limitation stems from FDA incentives. Since 1983, under the Orphan Drug Act, the FDA has offered substantial incentives for developing treatments for rare diseases, including tax credits, fee waivers, grants, and seven years of market exclusivity. These "orphan" drugs have become a popular pathway to market, and companies often later seek to expand a drug's approval to more common conditions. Rare diseases, however, tend to be driven by rare variants, which a chip built around common SNPs largely misses. This helps explain the lukewarm bidding interest: 23andMe's coverage of 6% of common SNPs is unlikely to deliver the rare disease breakthroughs that justify major pharmaceutical investment.

Knowing What ≠ Knowing How

The pharmaceutical industry's tepid response to 23andMe reflects a deeper issue that's become apparent across genomics research: efforts to extract clinically useful information from DNA data have reached the point of diminishing returns. Despite exponentially growing datasets and increasingly sophisticated algorithms, the gap between genetic prediction and actionable medical insight remains stubbornly wide.

Huntington’s disease is a good example of the problem that arises when we can predict but lack a treatment option. DNA testing alone can identify who will develop this disease. Yet many people who know that they might have inherited Huntington's disease choose not to get tested. They don’t want an answer that they cannot do anything about.

This is a fundamental limitation in our current datasets: even perfect prediction without mechanistic understanding can't lead to effective interventions. Knowing that someone carries genetic risk factors for diabetes, heart disease, or Alzheimer's tells us remarkably little about how to prevent these conditions or what biological processes we should target for treatment.

While algorithms of increasing sophistication may be able to identify more diseases and conditions from DNA alone, the lack of mechanistic knowledge keeps that information from being useful. Interestingly, this is analogous to the notorious “black box” challenge of AI.

While appropriately trained AI can generate appropriate outputs, it’s generally unknown how the output was derived. For many applications, not knowing how the algorithm reaches its conclusions is okay, since you can verify if it is or is not correct. But in the case of biology this limitation is far more profound. Without understanding the biological mechanisms that connect genetic variants to disease outcomes, even the most accurate AI predictions remain therapeutic dead ends.

We find ourselves in this position in part because biology has taken a DNA-centric approach for the last several decades. This isn't just a limitation of current datasets; it's a fundamental constraint of that approach. As I've mentioned before, our excessive focus on DNA as the master controller has systematically overlooked the intricate interplay of proteins, lipids, and carbohydrates. The metabolism, cellular dynamics, and environmental factors that actually determine biological outcomes have been relegated to a secondary role.

Our data reflects this bias.

Like Using Constellations for Interstellar Travel

For centuries, visible stars were used to enable navigation by land and sea. With the right adjustments for location and time of year, this was sufficient. But as we began to pursue off-planet travel, we needed to use fundamentally different tools for plotting our course. We are in a similar state with biology. The DNA-centric view has allowed us to traverse vast territory, but it’s not enough for where we’re going now.

To understand why current datasets fall so dramatically short of AI's promises, we need to grasp the sheer scale of biological complexity that exists between DNA and mature organisms (like humans, or trees, or mushrooms). DNA is just one component in a vast network of interacting molecular systems, each adding layers of combinatorial complexity that dwarf our current data collection capabilities.

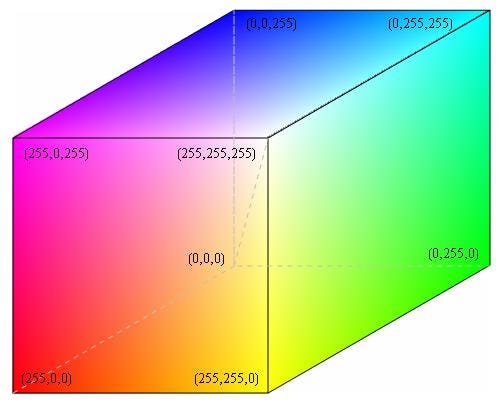

Let’s pause for a moment to dig into that phrase: combinatorial complexity. This is the complexity that arises when you consider all of the possible combinations, rather than the number of individual components. A great example of this is the color spectrum. Most people have three types of color receptors: red, green, and blue. The complete rainbow of colors we see is the result of different combinations of those colors, at varying intensities. The RGB cube below plots red, green, and blue on three different axes, each perpendicular to the others. All of this from just three individual components.
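A rough sketch makes the point concrete. The 256 intensity levels per channel used here are the convention of digital displays, not a property of the human eye, and the function name is mine. The idea is only that the number of combinations is the number of levels raised to the number of components.

```python
from itertools import product

def count_colors(channels: int, levels: int) -> int:
    """Number of distinct combinations when each channel takes one of `levels` values."""
    return levels ** channels

# Tiny case, enumerated explicitly: 3 channels that are each either off (0) or on (1).
tiny_palette = list(product(range(2), repeat=3))
print(f"3 channels x 2 levels: {len(tiny_palette)} combinations")

# Assumed display convention: 3 channels x 256 levels per channel.
print(f"3 channels x 256 levels: {count_colors(3, 256):,} combinations")
```

The component count never changes. It stays at three, and the combinations still run into the millions.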

Coming back to cells, consider the DNA-centric path from gene to function: DNA codes for RNA, which codes for proteins, which interact with other proteins to form complexes, which operate within cellular networks, which coordinate across tissues, which function as integrated organ systems, which keep the organism alive.

At each level, the number of possible combinations expands exponentially. If DNA interactions involve thousands of variables, protein interactions involve millions, cellular networks involve billions, and organism-level integration involves numbers that strain comprehension.

And then you layer in the influence of proteins, carbohydrates, and lipids at each step in those processes. And then you layer in the reactions and cycles that form the cellular context. And then you layer in the influences of age, nutrition, and external environment.
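Here is a small sketch of how quickly the possibilities grow as components are added. The component counts are hypothetical round numbers chosen for illustration, not biological measurements, and the function names are mine. It counts two things: how many pairs of components could interact, and how many distinct subsets of components could be active at once.

```python
import math

def pairwise_interactions(n: int) -> int:
    """Number of distinct pairs among n components: n choose 2."""
    return math.comb(n, 2)

def possible_subsets(n: int) -> int:
    """Number of distinct on/off combinations of n components: 2 to the power n."""
    return 2 ** n

# Hypothetical component counts, for illustration only.
for n in (10, 100, 1_000):
    pairs = pairwise_interactions(n)
    digits = len(str(possible_subsets(n)))
    print(f"{n:>5} components: {pairs:>7,} possible pairs, "
          f"on/off combinations is a {digits}-digit number")
```

Pairs grow with the square of the component count. Full combinations grow exponentially, and that is before adding cellular context, age, nutrition, or environment.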

This brings us back to 23andMe and why sophisticated pharmaceutical companies weren't willing to pay premium prices for what appeared to be a sizeable dataset with tons of paired data. They understand something that the headlines miss: there's a vast chasm between correlation and causation, between prediction and intervention, between data and understanding.

Even the most ambitious projects, such as attempts to create virtual cells that can simulate basic biological functions, have yet to achieve functional prototypes, even for single-celled organisms whose entire genomes contain only about 5% as many base pairs as the human genome has common SNPs. The theory is strong, but the data is lacking.

You Cannot Understand an Orchestra Note by Note

You cannot understand an orchestra by studying the individual notes of individual instruments. Yet we are still trying to understand biology by focusing almost exclusively on individual molecules. We need more data, but not more of the same data.

Fundamentally, this is a legacy of the scientific method as it has been practiced since the Enlightenment. Performing experiments manually severely limits the number of conditions that can be monitored and the number of samples that can be collected and analyzed. The method was designed around this constraint, optimizing for clear causal relationships between isolated components.

But biological systems aren't just collections of isolated parts; they're dynamic, interconnected networks where the interactions between components often matter more than the components themselves. A protein’s function can change entirely depending on its cellular context, nearby molecules, and environmental conditions. Likewise, gene expression is shaped by factors like epigenetic modifications and cellular stress. These are complexities that traditional reductive methods often treat as noise, but that are in fact essential to understanding biological mechanisms.

This is why we’ve hit diminishing returns from most DNA analysis; collecting more SNP data, analyzing larger cohorts, and applying more sophisticated algorithms to existing datasets can’t bridge the gap to meaningful biological understanding. We need data that is collected in ways that capture the integrated complexity of living systems. We need to listen to the entire orchestra.

Tools and Methods for Building with Biology

Here's where I step up onto my soapbox to remind you that we can now shift this story from sobering realism to optimism. We have tools capable of observing the complexity of the systems we’re trying to understand. Tools capable of constructive rather than reductive approaches to biological research. Methodologies that can build and test integrated systems rather than just breaking them apart.

Automated science platforms and self-driving labs can make previously infeasible experiments routine. Instead of controlling for all but one variable, we can now simultaneously vary multiple parameters and measure their complex interactions.

These systems can run thousands of experimental conditions in parallel, collecting data 24/7 with consistency and precision. With this, we can realize constructive methodologies by designing and testing integrated biological systems rather than just analyzing isolated components. We can finally begin to study biology the way it actually works: as dynamic, interconnected systems where emergent properties arise from complex interactions.

But, perhaps most importantly, these automated systems can use AI not only to analyze data, but to optimize data collection itself. Self-driving labs can identify which experiments are most likely to fill critical knowledge gaps and which parameter combinations are most informative. This creates a feedback loop where AI helps design better experiments, which generate better data, which enables better AI models—a compounding effect that could dramatically accelerate the pace of biological discovery.
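To make the shape of that feedback loop concrete, here is a deliberately tiny sketch. Everything in it is hypothetical: `run_experiment` is a simulated stand-in for a real assay, the temperatures are made up, and the selection rule is a crude space-filling heuristic (test the condition farthest from anything already measured) standing in for a real model's estimate of where it is most uncertain. It is not how any particular self-driving lab works. It only shows the loop of choose, measure, update, repeat.

```python
import math

def run_experiment(temp_c: float) -> float:
    """Hypothetical stand-in for a real assay: simulated yield peaking near 37 C."""
    return math.exp(-((temp_c - 37.0) ** 2) / 50.0)

def pick_next(candidates, measured):
    """Choose the untested condition farthest from every condition measured so far.

    This is a simple space-filling rule, used here as a placeholder for a
    model-based estimate of which experiment would be most informative.
    """
    untested = [c for c in candidates if c not in measured]
    return max(untested, key=lambda c: min(abs(c - m) for m in measured))

def closed_loop(candidates, seed, rounds):
    """Alternate between choosing an experiment and recording its result."""
    measured = {seed: run_experiment(seed)}
    for _ in range(rounds):
        nxt = pick_next(candidates, measured)
        measured[nxt] = run_experiment(nxt)
    return measured

# Hypothetical candidate incubation temperatures (degrees C).
candidates = [20.0, 25.0, 30.0, 35.0, 40.0, 45.0, 50.0]
results = closed_loop(candidates, seed=20.0, rounds=3)
best = max(results, key=results.get)

print("Order tested:", list(results))
print("Best condition found so far:", best)
```

A real platform would swap the heuristic for a learned model and the simulated function for robots and instruments. The structure of the loop stays the same.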

This isn't about doing existing research faster; it's about making new categories of research possible. We can systematically explore the vast parameter spaces that define biological systems, collecting the kind of comprehensive, systems-level data that AI actually needs to make meaningful predictions about human biology.

It’s Time to Build a Taller Giant

“If I have seen further, it is by standing on the shoulders of giants.”

- Sir Isaac Newton

AI evangelists describe a future in which we can predict and control biological systems with unprecedented precision. It isn't science fiction, but it also isn't going to emerge from analyzing existing datasets. It's going to require building entirely new categories of data through methodological approaches that match the complexity of the systems we're trying to understand.

It’s important to be realistic about the timeline and scope of what's required. This is a fundamental research infrastructure challenge that will require sustained investment and coordinated effort across research communities. But for the first time in scientific history, we actually know how to get there if we're willing to do the work.

Let’s build a taller giant.

[1] Since then, the court has approved a $305 million bid by the TTAM Research Institute, a nonprofit established by 23andMe co-founder Anne Wojcicki. It’s not yet known who funded the TTAM Research Institute bid, nor what its ongoing financial strategy will be.

[2] This is how 23andMe was able to build dashboards that told you things like whether you were particularly likely to be afraid of heights, and what time you likely woke up in the morning.

[3] Whole genome sequencing remains too expensive for consumer price points today, and was even more expensive when 23andMe started in 2006.

